Topic Modeling the Daily Princetonian

One of my favorite ways to waste time is reading internet comments. You can observe all kinds of angry people there and wonder at their pathologies. While I was still in school I particularly enjoyed reading the comments section of the Daily Princetonian because of its relevance to me. Though I only worked for a brief time formatting the print edition of the paper, I got to know some of the journalists, opinion writers, and editors through that work and through classes. Writing for a college newspaper requires a thick skin; the commenters are almost always out to get you.

At some point I decided I would try to learn something about internet news and comments, perhaps so I could convince myself all those hours were not wasted. A basic question we can ask about news and comments is: what are the paper and its readers talking about? This kind of question, of what topics underlie a corpus, is what probabilistic topic modeling can help answer. A frequently used method for topic modeling is latent Dirichlet allocation (Blei, Ng, & Jordan, 2003). There have been many extensions to this original model, including some that focus on internet posts and their comments. In this post I describe a model introduced by Yano, Cohen, & Smith (2009) and apply it to a corpus of Daily Princetonian articles and their comments.

Data

I focus in particular on the opinion pieces and letters of the Daily Princetonian from fall 2013 to spring 2015. I chose opinion pieces because in my experience these columns tend to attract the most, and the most rabid, comments, and that timespan because the Prince migrated its content to WordPress in spring 2013 and all comments from before then have been lost. Using Beautiful Soup and Selenium I scraped 962 articles from the opinion section with a total of 3576 comments. Using the tm package in R I removed capitalization, whitespace, numbers, and punctuation, stemmed the words using the Porter stemmer (Porter, 1980), and converted the documents to the bag-of-words representation required by latent Dirichlet allocation. The data used in this analysis, as well as the code for the model, are available at my repo comment_type_topic_model.
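To make the preprocessing steps concrete, here is a minimal Python sketch of the same pipeline. It is not the code I ran (that used tm in R). The function names `preprocess` and `bag_of_words` are made up for illustration, and the `stem` argument defaults to doing nothing, on the assumption that you would pass in a Porter stemmer from whatever library you prefer.

```python
import re
from collections import Counter


def preprocess(text, stem=lambda word: word):
    """Lowercase, drop numbers and punctuation, split on whitespace, then stem.

    `stem` is a placeholder (identity by default); a real Porter stemmer
    would be supplied by the caller.
    """
    text = text.lower()
    text = re.sub(r"\d+", "", text)      # remove numbers
    text = re.sub(r"[^\w\s]", "", text)  # remove punctuation, so "isn't" becomes "isnt"
    return [stem(token) for token in text.split()]  # split() also collapses whitespace


def bag_of_words(tokens):
    """Word order is discarded; only the per-word counts are kept."""
    return Counter(tokens)
```

For example, `bag_of_words(preprocess("Bicker, bicker!"))` counts the token `bicker` twice. Removing punctuation without inserting a space is why stems like `isnt` show up in the topics further down.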

Model

Yano, Cohen, & Smith (2009) describe this model as LinkLDA. I do not use the other model they propose, CommentLDA, because it depends on commenter identity. The Disqus commenting system that the Prince uses allows guest commenters who can use anything as their usernames; thus it’s not possible to identify guests, who posted about 40% of the comments I consider here.

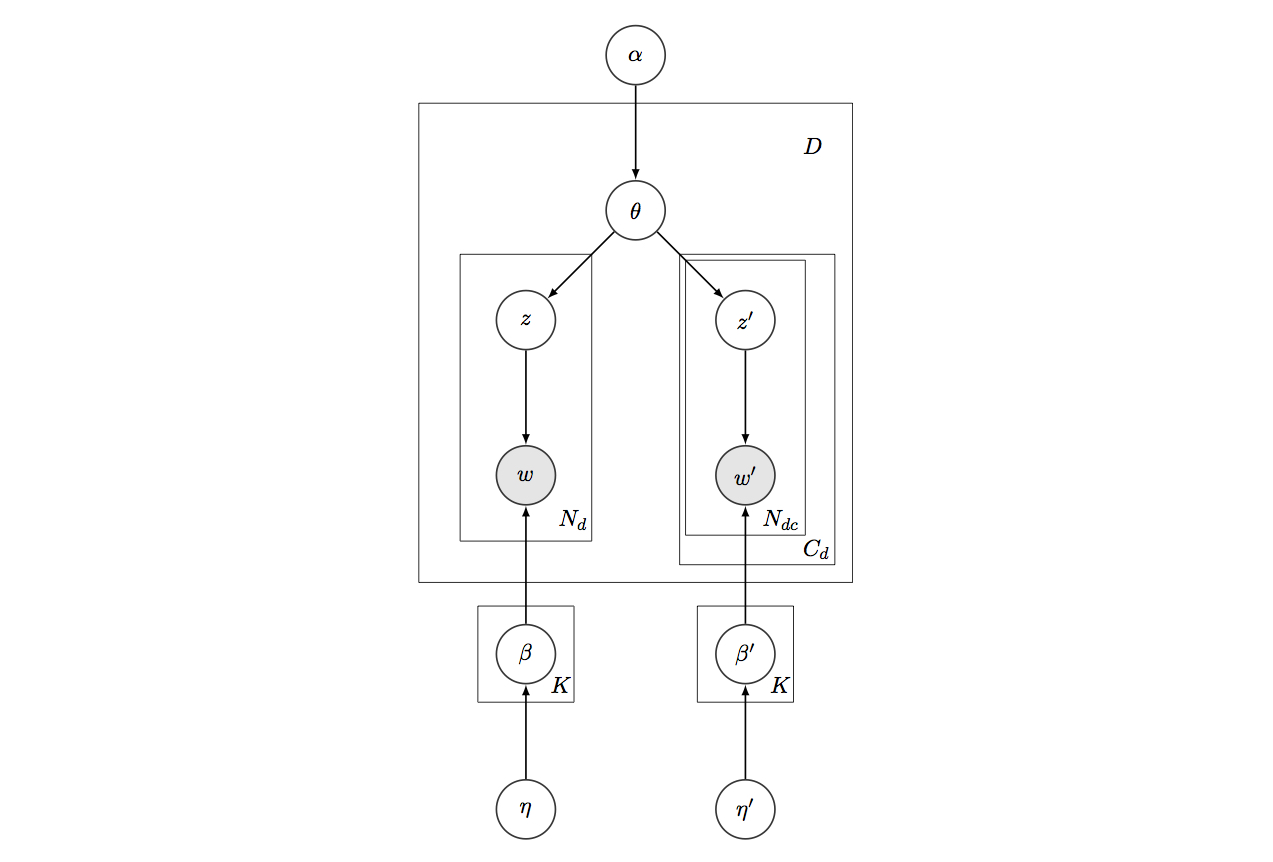

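The generative process of the model is:

$$
\begin{aligned}
\beta_k &\sim \mathrm{Dirichlet}(\eta), \qquad \beta'_k \sim \mathrm{Dirichlet}(\eta'), \qquad k = 1, \dots, K \\
\theta_d &\sim \mathrm{Dirichlet}(\alpha) \\
z_{d,n} \mid \theta_d &\sim \mathrm{Multinomial}(\theta_d) \\
w_{d,n} \mid z_{d,n}, \beta &\sim \mathrm{Multinomial}(\beta_{z_{d,n}}) \\
z'_{d,c,n} \mid \theta_d &\sim \mathrm{Multinomial}(\theta_d) \\
w'_{d,c,n} \mid z'_{d,c,n}, \beta' &\sim \mathrm{Multinomial}(\beta'_{z'_{d,c,n}})
\end{aligned}
$$

where $w_{d,n}$ is the $n$th observed word in article $d$, $z_{d,n}$ is the latent topic assignment of $w_{d,n}$, $\theta_d$ is the topic proportion parameter of article $d$, $w'_{d,c,n}$ is the $n$th observed word in comment $c$ in article $d$, $z'_{d,c,n}$ is the latent topic assignment of $w'_{d,c,n}$, $\beta_k$ is the article distribution over words of topic $k$, $\beta'_k$ is the comment distribution over words of topic $k$, and $\alpha$, $\eta$, and $\eta'$ are the Dirichlet prior parameters for $\theta$, $\beta$, and $\beta'$, respectively.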

And in plate notation:

I implemented a collapsed Gibbs sampler for the model with Rcpp.
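For readers who want to see the shape of such a sampler, here is a small pure-Python sketch. It is not my Rcpp implementation, and it makes some assumptions: words are already mapped to integer ids, all comments on an article are pooled into one list (which loses nothing here, since every comment word draws its topic from the article's topic proportions), and the hyperparameter defaults `alpha`, `eta`, and `eta_c` are arbitrary placeholders rather than the values I used. The name `link_lda_gibbs` is likewise made up. Each token's topic is resampled with probability proportional to (article's count for topic k + alpha) × (topic k's count for this word + prior) / (topic k's total count + V × prior), where the word counts come from the article or comment table depending on where the token appears.

```python
import random


def link_lda_gibbs(articles, comments, K, V_art, V_com,
                   alpha=0.1, eta=0.01, eta_c=0.01, iters=100, seed=0):
    """Collapsed Gibbs sampling for a LinkLDA-style model (illustrative sketch).

    articles[d] and comments[d] are lists of integer word ids; comments[d]
    pools the words of every comment on article d.
    """
    rng = random.Random(seed)
    # Per-article topic counts, shared by article words and comment words.
    doc_topic = [[0] * K for _ in articles]
    # One stream per word type:
    # (documents, topic-word counts, topic totals, Dirichlet prior, vocab size).
    streams = [
        (articles, [[0] * V_art for _ in range(K)], [0] * K, eta, V_art),
        (comments, [[0] * V_com for _ in range(K)], [0] * K, eta_c, V_com),
    ]

    def update(d, k, w, topic_word, topic_total, delta):
        doc_topic[d][k] += delta
        topic_word[k][w] += delta
        topic_total[k] += delta

    # Random initial topic assignment for every token.
    z = []
    for docs, topic_word, topic_total, _, _ in streams:
        z_stream = []
        for d, words in enumerate(docs):
            z_doc = [rng.randrange(K) for _ in words]
            for w, k in zip(words, z_doc):
                update(d, k, w, topic_word, topic_total, +1)
            z_stream.append(z_doc)
        z.append(z_stream)

    for _ in range(iters):
        for s, (docs, topic_word, topic_total, prior, V) in enumerate(streams):
            for d, words in enumerate(docs):
                for i, w in enumerate(words):
                    # Remove the token, then resample its topic from the
                    # full conditional given all other assignments.
                    update(d, z[s][d][i], w, topic_word, topic_total, -1)
                    weights = [
                        (doc_topic[d][k] + alpha)
                        * (topic_word[k][w] + prior) / (topic_total[k] + V * prior)
                        for k in range(K)
                    ]
                    k_new = rng.choices(range(K), weights=weights)[0]
                    z[s][d][i] = k_new
                    update(d, k_new, w, topic_word, topic_total, +1)

    # Topic counts per article, article topic-word counts, comment topic-word counts.
    return doc_topic, streams[0][1], streams[1][1]
```

A point estimate of a topic's word distribution can then be read off the returned counts as (count + prior) / (topic total + V × prior). A pure-Python loop like this is far too slow for a real corpus, which is the reason to write the inner loop in something like Rcpp.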

Article and Comment Topics

At first I was going to choose the number of topics K in some disciplined way, like evaluating held-out perplexity, but that requires various approximations and I got lazy, so I just settled on 40 topics. I ran 2000 iterations of the Gibbs sampler. I used the term score metric described in (Blei & Lafferty, 2009) to determine an order for topic visualization. What follows are 10 topics I found coherent and interesting. For each topic, I list the words that are among the 20 highest by term score in both the article and comment versions of the topic to give a sense of topic identity. I also take the difference between article and comment term scores and list the words with the greatest difference, to convey which words are more likely to appear in articles than in comments for the same topic. Likewise, I use the difference between comment and article scores to show which words are more likely to appear in comments than in articles. I have labeled each topic myself, based on my own judgment.
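The term score of word v in topic k weights the word's probability in that topic by how much it stands out relative to the other topics: score(k, v) = β(k, v) · log(β(k, v) / geometric mean over topics of β(·, v)). Below is a rough Python sketch of that computation and of the three word lists described above. It assumes the article and comment distributions are indexed by one shared vocabulary and that every probability is strictly positive (true when the Dirichlet priors are positive). The function names are hypothetical, not taken from my repo.

```python
import math


def term_scores(beta):
    """beta[k][v] is the probability of word v under topic k (all > 0)."""
    K, V = len(beta), len(beta[0])
    log_beta = [[math.log(p) for p in row] for row in beta]
    # Log of the geometric mean of each word's probability across topics.
    mean_log = [sum(log_beta[k][v] for k in range(K)) / K for v in range(V)]
    return [[beta[k][v] * (log_beta[k][v] - mean_log[v]) for v in range(V)]
            for k in range(K)]


def top_words(scores_k, vocab, n=20):
    """The n words with the highest score in one topic."""
    order = sorted(range(len(vocab)), key=lambda v: scores_k[v], reverse=True)
    return [vocab[v] for v in order[:n]]


def compare_topic(art_scores_k, com_scores_k, vocab, n=20):
    """Return the 'Both', 'Article', and 'Comment' word lists for one topic."""
    both = sorted(set(top_words(art_scores_k, vocab, n))
                  & set(top_words(com_scores_k, vocab, n)))
    diff = [a - c for a, c in zip(art_scores_k, com_scores_k)]
    article_leaning = top_words(diff, vocab, n)
    comment_leaning = top_words([-x for x in diff], vocab, n)
    return both, article_leaning, comment_leaning
```

A word that is equally probable in every topic gets a score of zero, so very common words drop out of the lists even when their raw probability is high.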

| Word set | Words |
| --- | --- |
| Both | israel, palestinian, jew, isra, jewish, peac, gaza, call |
| Article | divest, hillel, cjl, jewish, referendum, conflict, must, action, professor, compani, letter, petit, empha, faculti, support, gaza, profit, quo, two, falk |
| Comment | jew, arab, right, land, israel, world, kill, muslim, will, polici, bds, egypt, ethnic, govern, human, offer, open, clean, war, state |

| Word set | Words |
| --- | --- |
| Both | club, bicker, eat, member, system, friend, join, hose, social, cultur, independ, altern |
| Article | referendum, vote, conver, eat, institut, process, prospect, altern, social, particip, sophomor, bicker, arbitrari, space, better, decid, end, major, measur, facilit |
| Comment | peopl, offer, princ, experi, sign, best, discuss, opinion, open, shame, affili, board, exclus, club, comment, late, aid, fix, guarant, hang |

| Word set | Words |
| --- | --- |
| Both | sexism, joke, catcal, sexist, microaggress |
| Article | site, relationship, websit, can, reflect, woman, photo, user, facebook, internet, simpli, consum, exampl, favorit, christian, categori, frequent, isnt, shirt, microaggress |
| Comment | survey, cultur, offen, frank, taken, blatant, form, class, perpetu, uncomfort, term, trend, sexism, feel, harass, humor, impress, patriarchi, scale, bossi |

| Word set | Words |
| --- | --- |
| Both | white, american, black, asian, privileg, race, minor, group, diver, racial, african |
| Article | experi, diver, american, percent, show, cultur, south, ident, role, defin, grow, asian, countri, person, fresh, famili, communiti, grant, identifi, recognit |
| Comment | admiss, white, privileg, check, like, male, fortgang, kid, newbi, phrase, look, poor, seek, bodi, racist, base, econom, nativ, conver, legaci |

| Word set | Words |
| --- | --- |
| Both | drug, applic, system, justic, question, convict, admiss, punish, prison, marijuana |
| Article | past, admiss, access, candid, alcohol, violat, person, point, legal, privileg, ask, equal, inequ, level, standard, effect, result, scrutini, process, higher |
| Comment | princeton, reject, serv, crimin, offic, univ, drug, racial, stop, disadvantag, thousand, discriminatori, arrest, discrimin, sentenc, start, alreadi, freedom, non, sell |

| Word set | Words |
| --- | --- |
| Both | princ, report, articl, news, paper, publish, opinion, write, stori, newspap, comment, journalist, editor |
| Article | inform, onlin, media, want, reader, public, communiti, sourc, stori, comment, section, communic, content, role, copi, editor, read, edit, tradit, writer |
| Comment | princ, name, student, piec, report, marcelo, critic, issu, email, insult, serious, dailyprincetoniancom, facebook, hatr, construct, independ, york, alleg, appear, deci |

| Word set | Words |
| --- | --- |
| Both | student, health, mental, cps, help, campus |
| Article | suffer, stress, disea, freshmen, work, servic, percent, health, project, eat, psycholog, balanc, incom, disord, director, habit, serious, mental, dean, campus |
| Comment | princeton, univ, issu, administr, cps, come, forc, polici, provid, fear, suicid, time, best, need, communic, sign, student, lie, requir, share |

| Word set | Words |
| --- | --- |
| Both | rape, sexual, assault, victim, sex, consent, campus, case |
| Article | sexual, violenc, polic, happen, perhap, show, affirm, must, stone, roll, harass, assault, justic, state, vanderbilt, investig, excus, violat, drink, feder |
| Comment | rape, women, men, fal, woman, evid, parti, accus, man, protect, forc, polici, feminist, drunk, sex, consequ, emot, innoc, behavior, charg |

| Word set | Words |
| --- | --- |
| Both | major, human, art, studi, valu, histori, field, scienc |
| Article | fact, art, scienc, applic, concentr, explor, liber, scientif, scientist, safe, histori, engin, alumni, career, director, industri, note, warn, taught, technolog |
| Comment | use, physic, fair, stem, easi, lower, moment, major, pursu, complet, materi, choo, frosh, ongo, valu, author, integr, relax, subject, yeah |

| Word set | Words |
| --- | --- |
| Both | parent, marriag, children, sex, gay, mother |
| Article | self, piec, dunham, hookup, train, admir, studi, tiger, relationship, live, capac, econom, age, critic, sex, correl, humor, success, good, increa |
| Comment | coupl, rai, kid, children, biolog, father, will, adopt, orphan, dad, civil, straight, mom, perman, commit, femal, govern, non, deni, logic |

The differences between the article and comment distributions for each topic are interesting but cryptic if you don’t read the Prince. I have read the Prince and can offer some insight. In the Israel-Palestine topic, for instance, the articles are more likely to discuss events or initiatives related to the conflict, like referenda on whether to divest from Israeli companies. Meanwhile, in the comments people focus on identities and emphasize conflict, usually by accusing Israel of being an oppressive apartheid state or by accusing Muslims of being hateful killers who want to destroy Israel. In the sexual assault topic, the opinion writers usually decry rape culture, while in the comments people warn against false accusations of rape and blame the victim for being drunk or just regretting poor decisions. Generally the opinion writers are students and relatively liberal in outlook, while many commenters are not even students and are often reactionary.

References

- Porter, M. F. (1980). An algorithm for suffix stripping. Program, 14(3), 130–137. Retrieved from http://dblp.uni-trier.de/db/journals/program/program14.html#Porter80

- Blei, D. M., Ng, A. Y., & Jordan, M. I. (2003). Latent Dirichlet Allocation. J. Mach. Learn. Res., 3, 993–1022. Retrieved from http://dl.acm.org/citation.cfm?id=944919.944937

- Yano, T., Cohen, W. W., & Smith, N. A. (2009). Predicting Response to Political Blog Posts with Topic Models. In Proceedings of Human Language Technologies: The 2009 Annual Conference of the North American Chapter of the Association for Computational Linguistics (pp. 477–485). Stroudsburg, PA, USA: Association for Computational Linguistics. Retrieved from http://dl.acm.org/citation.cfm?id=1620754.1620824

- Blei, D. M., & Lafferty, J. D. (2009). Topic Models.